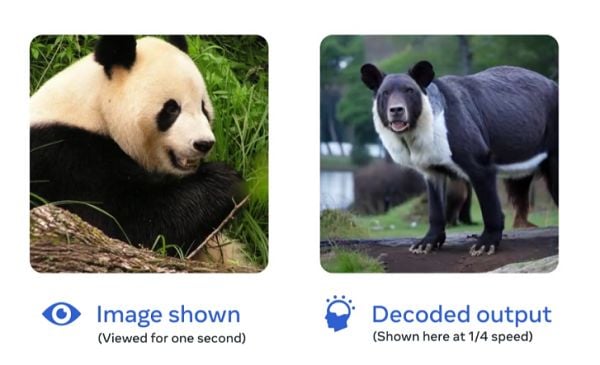

Meta’s AI advances are getting a little creepier. Its latest project claims to be able to translate how the human brain perceives visual inputs, with a view to simulating human-like thinking.

In its new AI research paper, Meta outlines its initial “Brain Decoding” process, which aims to simulate neuron activity and understand how humans think.

As per Meta:

“This AI system can be deployed in real time to reconstruct, from brain activity, the images perceived and processed by the brain at each instant. This opens up an important avenue to help the scientific community understand how images are represented in the brain, and then used as foundations of human intelligence.”

Which is a bit unsettling in itself, but Meta goes further:

“The image encoder builds a rich set of representations of the image independently of the brain. The brain encoder then learns to align MEG signals to these image embeddings […] The artificial neurons in the algorithm tend to be activated similarly to the physical neurons of the brain in response to the same image.”
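For the technically curious, the core idea in that quote can be illustrated with a toy Python sketch: an image encoder produces embeddings independently of the brain, and a separate model learns to map brain recordings into that same embedding space. To be clear, this is not Meta’s implementation. The random “image embeddings”, the made-up linear “brain” that turns them into simulated MEG readings, and the use of simple ridge regression as the brain encoder are all hypothetical assumptions, chosen only to keep the example small and runnable.

```python
import math
import random

random.seed(0)

# Hypothetical toy sizes; nothing here comes from Meta's paper.
EMB_DIM, MEG_DIM, N_IMAGES, N_TRIALS = 8, 16, 4, 200
NOISE = 0.1


def randn_vec(n):
    return [random.gauss(0.0, 1.0) for _ in range(n)]


def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def solve(a, b):
    """Solve a @ x = b (a: n x n, b: n x k) by Gauss-Jordan elimination with partial pivoting."""
    n, k = len(a), len(b[0])
    m = [a[i][:] + b[i][:] for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [[m[i][n + j] / m[i][i] for j in range(k)] for i in range(n)]


# Hypothetical stand-ins: embeddings from an image encoder that never sees
# brain data, and an unknown linear "brain" that turns each embedding into
# a noisy simulated MEG reading.
images = [randn_vec(EMB_DIM) for _ in range(N_IMAGES)]
brain = [randn_vec(EMB_DIM) for _ in range(MEG_DIM)]  # MEG_DIM x EMB_DIM


def record_meg(image_idx):
    clean = matvec(brain, images[image_idx])
    return [x + random.gauss(0.0, NOISE) for x in clean]


# Training pairs: (simulated MEG recording, embedding of the image being viewed).
labels = [t % N_IMAGES for t in range(N_TRIALS)]
X = [record_meg(i) for i in labels]
Y = [images[i] for i in labels]

# Toy "brain encoder": ridge regression from MEG space into embedding space,
# a simplified substitute for Meta's model. Solves (X^T X + lam*I) W = X^T Y,
# giving W with shape MEG_DIM x EMB_DIM.
lam = 1.0
xtx = [[sum(x[i] * x[j] for x in X) + (lam if i == j else 0.0)
        for j in range(MEG_DIM)] for i in range(MEG_DIM)]
xty = [[sum(x[i] * y[j] for x, y in zip(X, Y)) for j in range(EMB_DIM)]
       for i in range(MEG_DIM)]
W = solve(xtx, xty)


def decode(meg):
    """Project a MEG reading into embedding space and return the closest image index."""
    pred = [sum(meg[i] * W[i][j] for i in range(MEG_DIM)) for j in range(EMB_DIM)]
    return max(range(N_IMAGES), key=lambda k: cosine(pred, images[k]))


# Evaluate on fresh simulated recordings the encoder has not seen.
test_labels = [random.randrange(N_IMAGES) for _ in range(100)]
accuracy = sum(decode(record_meg(i)) == i for i in test_labels) / len(test_labels)
print(f"retrieval accuracy: {accuracy:.2f}")
```

“Decoding” here just means mapping a new recording into the embedding space and picking the known image whose embedding is closest by cosine similarity. Meta’s system works on real MEG recordings rather than simulated signals, so treat this purely as an illustration of the alignment concept.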

So the system is designed to think the way humans think, in order to come up with more human-like responses. That makes sense, as that is the ultimate aim of these more advanced AI systems. But reading how Meta lays it out is a little disconcerting, especially the suggestion that these models may be able to simulate human-like brain activity.

“Overall, our results show that MEG can be used to decipher, with millisecond precision, the rise of complex representations generated in the brain. More generally, this research strengthens Meta’s long-term research initiative to understand the foundations of human intelligence.”

I mean, that’s the endgame of AI research, right? To recreate the human brain in digital form, enabling more lifelike, engaging experiences that replicate human responses and activity.

It just feels a little too sci-fi, like we’re moving into Terminator territory, with computers that will increasingly interact with you the way that humans do. Which, of course, they already do, through conversational AI tools that can chat with you and “understand” added context. But further aligning computer chips with neurons is another big step.

Meta says that the project could have implications for brain injury patients and people who’ve lost the ability to speak, providing entirely new ways to interact with people who are otherwise locked inside their bodies.

Which would be amazing, and Meta is also working on a separate project that would enable brain activity to drive digital interaction.

That project has been in discussion since 2017, and while Meta has stepped back from its initial brain implant approach, it has been using this same MEG (magnetoencephalography) tracking to map brain activity in its more recent mind-reading projects.

So that’s Meta, a company with a long history of misusing (or facilitating the misuse of) user data, reading your mind. All for a good cause, no doubt.

The implications are amazing, but again, it’s a little unnerving to see terms like “brain encoder” in a research paper.

Still, that is the logical conclusion of advanced AI research, and it seems inevitable that we’ll soon see more AI applications that closely replicate human responses and engagement.

It’s a bit weird, but the technology is advancing quickly.

You can read Meta’s latest AI research paper here.